Google plans to combine its Gemini and Veo models into a single, multimodal system

Google plans to combine its Gemini and Veo AI models into a single multimodal system, according to Google DeepMind CEO Demis Hassabis.

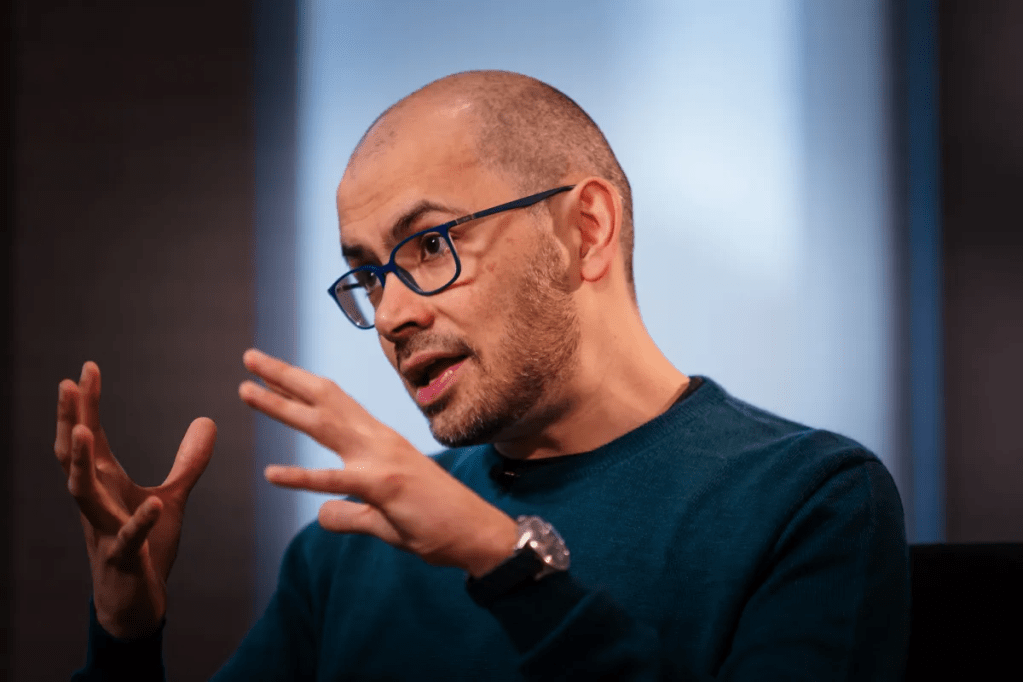

In a recent episode of the Possible podcast, co-hosted by LinkedIn co-founder Reid Hoffman, Hassabis revealed that the tech giant aims to merge its flagship large language model Gemini with Veo, its video-generating AI, to enhance the system’s grasp of real-world physics and environments.

“We’ve always built Gemini, our foundation model, to be multimodal from the beginning,” Hassabis said. “The reason we did that is because we have a vision for this idea of a universal digital assistant — an assistant that actually helps you in the real world.”

The move reflects a broader trend in the AI industry toward “omnimodal” or “any-to-any” models — systems capable of processing and synthesizing diverse forms of media, including text, images, audio, and video. Google’s latest Gemini models already support audio, image, and text generation. Competitors are making similar strides: OpenAI’s ChatGPT can now generate images natively, while Amazon has announced plans to launch an all-purpose AI model later this year.

These powerful multimodal models require vast amounts of training data. Hassabis hinted that a significant portion of the data powering Veo, particularly for learning real-world physics, comes from YouTube — a platform owned by Google.

“Basically, by watching YouTube videos — a lot of YouTube videos — [Veo 2] can figure out, you know, the physics of the world,” he explained.

While Google has been tight-lipped about specifics, it previously told TechCrunch that its models “may be” trained on “some” YouTube content, in accordance with its agreements with creators. Notably, Google updated its terms of service last year, reportedly to expand its ability to use YouTube content for AI training.

Combining Gemini and Veo would move Google closer to the universal assistant Hassabis describes: one that understands language and can also interpret and interact with the physical world.