Revolutionizing Robotics with Local AI Processing

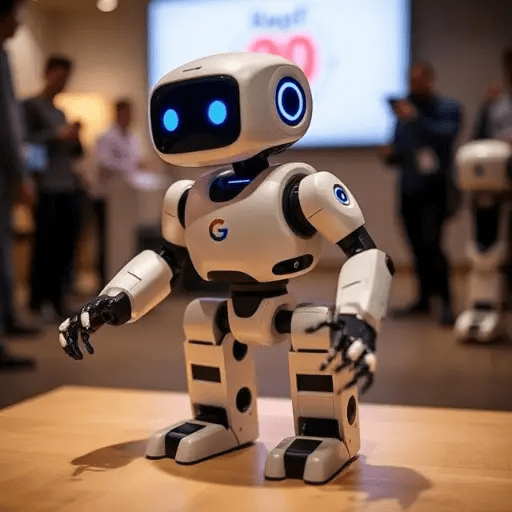

Google DeepMind has released Gemini Robotics On-Device, a new vision-language-action (VLA) model that runs locally on the robot, so tasks can be carried out without an internet connection. The model builds on the company’s previous Gemini Robotics model, which was introduced in March, and developers can control and fine-tune it using natural language prompts.

According to Google, the Gemini Robotics On-Device model performs at a level comparable to its cloud-based counterpart, while outperforming other on-device models on general benchmarks. The company showcased the model’s capabilities in a demo in which robots unzipped bags and folded clothes. Both tasks demand fine dexterity and manipulation of flexible objects, which Google presents as evidence that the model can generalize to complex scenarios.

The Gemini Robotics On-Device model was trained on ALOHA robots and later adapted to work on a bi-arm Franka FR3 robot and the Apollo humanoid robot by Apptronik. Google claims that the bi-arm Franka FR3 robot was able to tackle scenarios and objects it had not “seen” before, including assembly on an industrial belt. If the claim holds, it suggests the model can carry what it learned on one robot over to different hardware and unfamiliar tasks.

To support developers, Google is releasing a Gemini Robotics SDK, which allows them to train robots on new tasks using the MuJoCo physics simulator. MuJoCo simulates robotic systems and their environments, so developers can test and refine a model’s behavior virtually before deploying it on real robots. The Gemini Robotics SDK also includes tools for fine-tuning the model for specific tasks.

The release of Gemini Robotics On-Device comes as other AI model developers, such as Nvidia and Hugging Face, are also exploring robotics. This competition is expected to drive further advances in the field, with potential applications in industries including manufacturing, healthcare, and logistics.

According to Google, the Gemini Robotics On-Device model uses a combination of natural language processing (NLP) and computer vision to understand and interpret the world around it. The model is trained on a large dataset of text and images, from which it learns patterns and relationships between objects and actions. This allows the model to generalize to situations that were not in its training data.

The Gemini Robotics On-Device model also incorporates a number of advanced technologies, including:

Reinforcement learning: The model uses reinforcement learning to learn from experience and adapt to new situations. This involves training the model to perform tasks and receiving rewards or penalties based on its performance.

Transfer learning: The model uses transfer learning to adapt to new tasks and environments. This involves pre-training the model on a large dataset and then fine-tuning it on a smaller dataset specific to the task at hand.

Attention mechanisms: The model uses attention mechanisms to focus on specific parts of the input data and ignore irrelevant information. This enables the model to process complex inputs and make accurate predictions.
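To make the idea of attention concrete, the sketch below implements generic scaled dot-product attention in plain Python. It is a textbook illustration only, not a description of how Gemini Robotics On-Device is built; the function names (`softmax`, `attend`) and the toy vectors are hypothetical and chosen purely for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Similarity between the query and each key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


# Hypothetical toy inputs: three input positions with two-dimensional features.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]  # points along the first feature axis

output, weights = attend(query, keys, values)
print("attention weights:", weights)
print("attended output:", output)
```

In this toy case the query is aligned with the first and third keys, so those positions receive most of the weight and the second position is largely ignored. That is the sense in which attention lets a model focus on relevant parts of its input.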

Taken together, Gemini Robotics On-Device is a step forward for AI-powered robots that can operate without a network connection, and it could find use across a wide range of industries and applications.